Roblox chat safety issues have been a growing concern as the platform continues to expand its social features and global user base. Roblox is designed to be a creative and social environment, allowing players to communicate through text chat, voice chat, and in-game messaging. While these tools help players collaborate and make friends, they also introduce risks related to inappropriate language, harassment, scams, and unsafe interactions.

Over the years, Roblox has invested heavily in moderation systems, automated filters, and community reporting tools to keep chat safe, especially for younger users. However, no system is perfect. Players often report problems such as over-filtering, missed harmful messages, false moderation actions, or confusion about what is allowed. Parents and developers also express concerns about how chat features are monitored and enforced.

This blog post explains the main Roblox chat safety issues, why they still exist despite moderation efforts, how Roblox handles chat safety in 2026, and what players, parents, and creators can do to reduce risks and create a safer experience.

How Roblox Chat Works

Roblox chat allows players to communicate in several ways, including in-game text chat, private messages, group chats, and voice chat for eligible users. Each chat type is governed by Roblox community standards and filtered through automated moderation systems.

Text chat uses real-time filtering that scans messages before they appear. Voice chat relies on a mix of behavior monitoring, user reports, and account-level restrictions. Private messages and group chats follow similar safety rules but may feel more personal, which is why moderation plays an important role in preventing misuse.

Common Roblox Chat Safety Issues

Inappropriate Language Slipping Through

One of the most reported issues is inappropriate or suggestive language appearing in chat. Although Roblox uses advanced filtering, some users find ways to bypass filters using spelling tricks, coded language, or context-based phrases.
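To see why spelling tricks work, it helps to picture what a filter has to do before it can match anything. The snippet below is a minimal sketch, not Roblox's actual filter: the blocklist, the substitution map, and the function names are all assumptions made up for illustration. It undoes a few common disguises (look-alike characters, inserted punctuation, stretched letters) and then checks the result against a tiny blocklist.

```python
import re
import unicodedata

# Hypothetical blocklist for illustration only. Terms are stored without
# doubled letters because normalize() collapses repeated characters.
BLOCKED_TERMS = {"scam", "idiot"}

# Assumed, partial map of look-alike characters used to disguise words.
LOOKALIKES = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "@": "a", "$": "s"}
)


def normalize(message: str) -> str:
    """Reduce a message to a canonical form before matching."""
    # Fold full-width and other compatibility characters, then lowercase.
    text = unicodedata.normalize("NFKC", message).lower()
    # Swap look-alike digits and symbols back to letters.
    text = text.translate(LOOKALIKES)
    # Drop everything that is not a letter, e.g. "s.c.a.m" or "s c a m".
    text = re.sub(r"[^a-z]", "", text)
    # Collapse stretched letters, e.g. "scaaaam" becomes "scam".
    return re.sub(r"(.)\1+", r"\1", text)


def contains_blocked_term(message: str) -> bool:
    """Return True if any blocked term appears in the normalized message."""
    normalized = normalize(message)
    return any(term in normalized for term in BLOCKED_TERMS)


print(contains_blocked_term("s.c.4.m"))      # True
print(contains_blocked_term("$c@@@m"))       # True
print(contains_blocked_term("hello there"))  # False
```

Each new trick needs a new normalization rule, which is why filters are always catching up. The cleanup also has a cost: because spaces are removed, an innocent message like "this camera" is flagged here, since its letters run together into "thiscamera".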

Over-Filtering of Normal Messages

At the same time, many players complain that harmless words, jokes, or normal conversation get blocked. This happens when automated filters interpret messages too conservatively, especially when context is unclear.
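A simple way to see how over-filtering happens is to compare two matching strategies. This is a sketch under assumed rules, not a description of how Roblox's filter is built: the blocked terms and both function names are hypothetical. One function blocks a message if a restricted term appears anywhere inside it, the other only if the term appears as a whole word.

```python
import re

# Hypothetical restricted terms, chosen only to demonstrate the effect.
BLOCKED_TERMS = {"kill", "hate"}


def substring_filter(message: str) -> bool:
    """Conservative check: block if a term appears anywhere in the text."""
    lowered = message.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def whole_word_filter(message: str) -> bool:
    """Looser check: block only if a term appears as a complete word."""
    words = re.findall(r"[a-z']+", message.lower())
    return any(word in BLOCKED_TERMS for word in words)


for text in ["I need more skill points", "whatever works", "I hate this map"]:
    print(text, substring_filter(text), whole_word_filter(text))
```

The first two messages are blocked by the substring check ("skill" contains "kill", "whatever" contains "hate") but pass the whole-word check. The third is caught by both. The conservative version misses less, but it also blocks more ordinary conversation, which is the trade-off players notice.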

Harassment and Toxic Behavior

Some players experience bullying, harassment, or repeated negative messages in public servers or competitive games. While reporting tools exist, harmful interactions can still occur before moderation action is taken.

Scam and Phishing Attempts

Scammers sometimes use chat to post fake links, promise free items, or ask for account details. Younger or inexperienced players are particularly vulnerable to these tactics.
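The warning signs described above can be written down as a rough checklist. The following is an illustrative sketch only: the trusted-host list, the bait phrases, and the `scam_signals` function are assumptions for this example and do not reflect any real Roblox system. It flags links that point somewhere other than an expected site, plus wording that commonly appears in scam messages.

```python
import re
from urllib.parse import urlparse

# Assumed allowlist of hosts considered safe for this example.
TRUSTED_HOSTS = {"roblox.com", "www.roblox.com"}

# Hypothetical phrases that often accompany scam attempts.
BAIT_PHRASES = ("free items", "password", "account details")

URL_PATTERN = re.compile(r"https?://\S+", re.IGNORECASE)


def scam_signals(message: str) -> list[str]:
    """Return a list of reasons a message looks suspicious (empty if none)."""
    signals = []
    for url in URL_PATTERN.findall(message):
        host = urlparse(url).hostname or ""
        if host not in TRUSTED_HOSTS:
            signals.append(f"untrusted link: {host}")
    lowered = message.lower()
    for phrase in BAIT_PHRASES:
        if phrase in lowered:
            signals.append(f"bait phrase: {phrase}")
    return signals


print(scam_signals("Free items here: http://free-items.example/login"))
print(scam_signals("See https://www.roblox.com/catalog"))
```

A checklist like this only catches obvious cases. It is a reasonable mental model for players and parents, though: an unfamiliar link combined with an offer that sounds too good is a strong reason not to click.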

Voice Chat Concerns

Voice chat introduces additional safety challenges. Players may encounter inappropriate language, disruptive background noise, or other behavior that is harder to moderate in real time than text chat.

Why Chat Safety Issues Still Exist

Despite ongoing improvements, chat safety issues persist for several reasons.

Roblox hosts millions of players communicating in real time, making it impossible for human moderators to monitor everything instantly. Automated systems are fast but imperfect, sometimes missing harmful content or blocking safe messages.

Cultural and language differences also play a role. Words that are harmless in one region may be offensive or risky in another, making global moderation complex.

Finally, some users intentionally test moderation limits, constantly adapting their behavior to bypass filters. This creates an ongoing cycle where moderation systems must evolve continuously.

How Roblox Moderates Chat in 2026

In 2026, Roblox uses a layered approach to chat safety.

Automated filters scan text messages before they appear, blocking known harmful words and phrases. Machine-learning models attempt to understand context, but when a message is ambiguous they still lean toward blocking it rather than letting it through.

User reporting tools allow players to flag messages or behavior they believe violate rules. These reports help moderators review cases more efficiently.

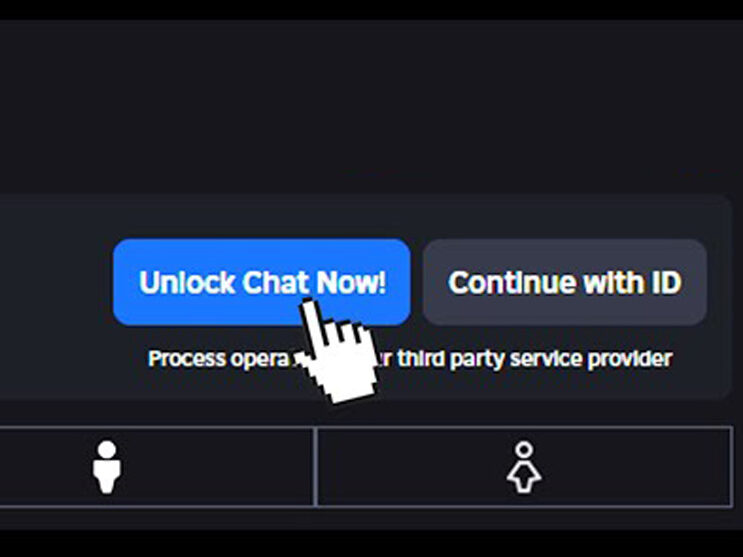

Account-level enforcement tracks repeat offenders. Players who frequently violate chat rules may face temporary chat restrictions, account suspensions, or permanent bans.
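Escalating penalties for repeat offenders can be pictured as a simple ladder. The sketch below is purely illustrative: Roblox does not publish thresholds in this form, so the 30-day window, the violation counts, and the `ViolationLog` class are all assumptions. Only the three penalty names come from the description above.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Hypothetical policy: only violations in the last 30 days count.
WINDOW = timedelta(days=30)

# Hypothetical thresholds, ordered from mildest to most severe.
LADDER = [
    (2, "temporary chat restriction"),
    (4, "account suspension"),
    (6, "permanent ban"),
]


@dataclass
class ViolationLog:
    """Tracks one account's confirmed chat violations."""

    timestamps: list[datetime] = field(default_factory=list)

    def record(self, when: datetime) -> str:
        """Store a violation and return the action the ladder calls for."""
        self.timestamps.append(when)
        recent = [t for t in self.timestamps if when - t <= WINDOW]
        action = "logged only"
        for threshold, name in LADDER:
            if len(recent) >= threshold:
                action = name
        return action


log = ViolationLog()
start = datetime(2026, 1, 1)
for day in range(6):
    print(day + 1, log.record(start + timedelta(days=day)))
```

With one violation per day, the account moves from "logged only" to a temporary chat restriction on the second violation, a suspension on the fourth, and a permanent ban on the sixth. Violations spaced more than 30 days apart would never climb the ladder under these assumed rules, which shows how the choice of window shapes who counts as a repeat offender.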

For voice chat, Roblox relies on eligibility requirements, behavior tracking, and post-incident review rather than real-time transcription in most cases.

Impact on Players

For many players, chat moderation creates a safer and more welcoming environment. Younger users benefit from reduced exposure to harmful language and scams.

However, stricter moderation can also feel frustrating. Players may struggle to communicate normally when messages are filtered unexpectedly. Competitive players may feel that reporting systems are abused during heated matches.

Understanding how chat moderation works helps players adjust expectations and communicate more clearly.

Impact on Parents and Guardians

Parents often worry about who their children interact with online. Chat safety issues highlight the importance of parental controls, privacy settings, and open communication.

Roblox provides tools that allow parents to limit chat access, disable private messages, and control who can communicate with their child. These settings are especially useful for younger players who may not recognize unsafe behavior.

What Players Can Do to Stay Safe

Staying safe on Roblox chat starts with awareness. Players should avoid sharing personal information, clicking unknown links, or responding to suspicious offers.

Using respectful and clear language reduces the chance of moderation issues. If harassment occurs, muting or reporting the user is more effective than engaging.

Understanding that filters are designed for safety helps players adapt without frustration.

What Developers and Group Owners Should Know

Developers and group admins play a role in chat safety within their communities. Clear rules, active moderation, and educating players about acceptable behavior can significantly reduce issues.

Games with large communities benefit from reporting guidelines and visible enforcement, which discourages toxic behavior and misuse of chat systems.

The Balance Between Safety and Freedom

Roblox chat safety issues reflect a larger challenge faced by many online platforms: balancing free communication with user protection. Over-filtering can limit expression, while under-filtering can expose users to harm.

Roblox continues to adjust this balance through system updates, feedback, and moderation changes. While no solution is perfect, gradual improvements aim to make chat safer without completely restricting social interaction.

FAQ

Why does Roblox block normal words in chat?

Automated filters are tuned conservatively, so they sometimes block harmless words, especially when the context of a message is unclear.

Is Roblox chat safe for kids?

Roblox chat includes safety systems, but parental controls and supervision are strongly recommended.

Can I turn off chat completely?

Yes, chat features can be limited or disabled through account and parental control settings.

How do I report unsafe chat messages?

Use the in-game reporting tools to flag messages or behavior that violate rules.

Does voice chat have moderation?

Yes, voice chat is moderated through reports, eligibility rules, and account enforcement.

Do chat reports actually work?

Reports feed into moderator review and help identify repeat offenders, although action is not always immediate.

Roblox chat safety issues remain an important topic as the platform continues to grow and evolve. While moderation systems in 2026 are more advanced than ever, challenges like over-filtering, harassment, scams, and voice chat risks still exist. By understanding how chat moderation works, using available safety tools, and practicing responsible communication, players and parents can reduce risks significantly. Chat is a powerful feature when used safely, and ongoing improvements aim to make Roblox a more secure and enjoyable social space for everyone.